19.2. Weights & Biases - Sweeps

19.2.1. Introduction

Hyperparameter sweeps systematically explore combinations of training parameters, such as learning rate, batch size, and optimizer, to identify configurations that improve model performance.

Why Sweep Hyperparameters?

Small changes can have big impacts: Minor tweaks to hyperparameters can significantly affect training outcomes.

Gain insights: Sweeps help understand how different hyperparameters influence training dynamics.

Experiment at scale: Run one model on many datasets or many models on one dataset.

Automate and save time: Automating the exploration process is more efficient than manual tuning.

Tip

Weights & Biases (W&B) offers robust tools for managing and automating hyperparameter sweeps.

19.2.2. ResNet-50 Classification Example

To illustrate W&B sweeps, we walk through an example from this GitHub repository, which is part of the “Optimizing ML Workflows on an AI Cluster” workshop. The example trains a ResNet-50 model on a simple classification task using the CIFAR-10 dataset.

Below is a directory-style tree view of the files used in this example, along with brief explanations for each file:

wandb_sweep/
├── config.yaml            # Base training configuration (default hyperparameters)
├── sweep_config.yaml      # Sweep definition: parameters to search and optimization method
├── sweep_submission.slrm  # SLURM batch script to submit sweep agents to the cluster
└── wandb_sweep.py         # Training script that reads config and logs results to W&B

Each file is described below.

config.yaml: Contains the default hyperparameters and general configuration used during training. Values here are overridden by sweep_config.yaml during sweeps.

sweep_config.yaml: Defines the sweep strategy:

The script (program) to execute

The search method (grid, random, or bayes)

The metric to optimize (e.g., validation accuracy)

The hyperparameters and value ranges to explore

sweep_submission.slrm: A SLURM batch submission script used to launch sweep agents on an AI cluster. This typically includes calls to wandb agent and resource requests (CPU/GPU, memory, etc.).

wandb_sweep.py: The training script executed by each sweep run. It:

Reads hyperparameters from W&B config

Trains the model using those parameters

Logs metrics and artifacts to W&B for tracking and comparison
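The three responsibilities of the training script can be sketched as follows. This is a minimal illustration, not the contents of the actual wandb_sweep.py: the DEFAULTS dictionary, the epochs parameter, and the toy_validation_accuracy function are hypothetical placeholders that stand in for config.yaml and for real ResNet-50 training.

```python
import math

# Hypothetical defaults standing in for the contents of config.yaml.
DEFAULTS = {"learning_rate": 0.01, "epochs": 3}


def toy_validation_accuracy(learning_rate: float, epoch: int) -> float:
    """Placeholder for real training: returns a made-up accuracy in [0, 1)."""
    return 1.0 - math.exp(-(epoch + 1) * learning_rate * 10)


def main() -> None:
    # Imported lazily so the helper above can be used without W&B installed.
    import wandb

    # When launched by a sweep agent, values chosen by the sweep take
    # precedence over the defaults passed to wandb.init.
    with wandb.init(config=DEFAULTS) as run:
        cfg = run.config
        for epoch in range(cfg.epochs):
            acc = toy_validation_accuracy(cfg.learning_rate, epoch)
            # The key should match metric.name in sweep_config.yaml.
            run.log({"epoch": epoch, "Validation Accuracy": acc})

# A real script would call main() here so the agent can execute it.
```

The logged key "Validation Accuracy" is chosen to match the metric name used in the sweep definition, so that the sweep can rank runs by it.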

In the following sections, we cover each step in detail.

19.2.3. Step 1: Define the Sweep

The first step in setting up a W&B sweep is to define the sweep configuration.

This is typically done using a sweep_config.yaml file. The key elements of sweep_config.yaml are:

Program to run: The training script to execute (e.g., wandb_sweep.py)

Search method: Choose from:

grid: tests all combinations

random: samples combinations at random

bayes: uses Bayesian optimization for smarter search

Metric to optimize: Define the goal and metric name (e.g., maximize validation accuracy)

Parameters to sweep over: List the hyperparameters and their values to explore

# sweep_config.yaml
program: wandb_sweep.py
method: grid # other options: bayes, random
metric:
  goal: maximize
  name: Validation Accuracy
parameters:
  learning_rate:
    values: [0.1, 0.01]
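To see how many runs a grid sweep produces, it can help to enumerate the combinations by hand. The sketch below is only an illustration of the Cartesian product that grid search implies, not W&B's internal implementation; the batch_size parameter is a hypothetical addition that does not appear in the example configuration.

```python
from itertools import product


def expand_grid(parameters):
    """Return one dict per combination of the listed parameter values."""
    names = list(parameters)
    value_lists = [parameters[name]["values"] for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


# learning_rate mirrors sweep_config.yaml; batch_size is hypothetical.
parameters = {
    "learning_rate": {"values": [0.1, 0.01]},
    "batch_size": {"values": [64, 128]},
}

runs = expand_grid(parameters)
for run in runs:
    print(run)
```

With the single learning_rate parameter from the example, the grid contains two runs; each additional parameter multiplies the total by its number of values.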

Note

Use config.yaml for fixed parameters not being searched. Values in sweep_config.yaml will override those in config.yaml during the sweep.
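The override rule in the note can be pictured as a dictionary merge in which sweep values win. This is a simplified sketch under assumed names: resolve_config and the default values shown are hypothetical, and the actual script may combine the two sources differently.

```python
def resolve_config(defaults, sweep_values):
    """Merge two config dicts; keys set by the sweep replace the defaults."""
    merged = dict(defaults)      # copy so the defaults stay untouched
    merged.update(sweep_values)  # sweep values take precedence
    return merged


# Hypothetical defaults, as they might appear in config.yaml.
defaults = {"learning_rate": 0.001, "batch_size": 128, "optimizer": "sgd"}

# One combination picked by the sweep.
sweep_values = {"learning_rate": 0.1}

effective = resolve_config(defaults, sweep_values)
print(effective)
```

Parameters that the sweep does not search, such as batch_size here, keep their default values in every run.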

19.2.4. Step 2: Initialize the Sweep

Once the sweep configuration is defined, the next step is to initialize the sweep. W&B uses Sweep Controllers to manage and orchestrate sweeps.

From within your Python environment (e.g., a mamba environment), run the following command:

wandb sweep --project project_name sweep_config.yaml

Replace project_name with the name of your W&B project. After you run this command, W&B will:

Register the sweep with your account

Return a sweep ID, which uniquely identifies the sweep

Allow you to launch one or more sweep agents using this ID in the next step

# Sample output
wandb sweep --project test_project sweep_config.yaml
wandb: Creating sweep from: sweep_config.yaml
wandb: Creating sweep with ID: 3r3sv8tg
wandb: View sweep at: https://wandb.ai/mxshad/test_project/sweeps/3r3sv8tg
wandb: Run sweep agent with: wandb agent mxshad/test_project/3r3sv8tg
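A sweep can also be registered from Python with wandb.sweep, which returns the sweep ID as a string. The sketch below mirrors the YAML definition from Step 1 as a dictionary; the create_sweep wrapper is a hypothetical helper, and the example in this section uses the command-line route shown above.

```python
# Dictionary form of the sweep definition from sweep_config.yaml.
SWEEP_CONFIG = {
    "program": "wandb_sweep.py",
    "method": "grid",
    "metric": {"goal": "maximize", "name": "Validation Accuracy"},
    "parameters": {"learning_rate": {"values": [0.1, 0.01]}},
}


def create_sweep(project: str) -> str:
    """Register the sweep with W&B and return its sweep ID."""
    # Imported lazily so this module loads even without W&B installed.
    import wandb

    return wandb.sweep(sweep=SWEEP_CONFIG, project=project)
```

Calling create_sweep("test_project") requires a logged-in W&B account and would return an ID of the same kind as the one printed in the sample output.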

19.2.5. Step 3: Run Sweep Agents

Once the sweep is initialized and you have a sweep ID, the next step is to run sweep agents.

Sweep agents are processes that:

Connect to the W&B sweep controller

Fetch a set of hyperparameter values from the sweep

Run the training script with those values

Report results back to W&B

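By default, an agent keeps requesting new runs until the sweep is finished or the agent is stopped; with --count 1, each agent runs exactly one experiment from the sweep. To parallelize experiments on an AI cluster, you can launch sweep agents using a SLURM array job. For example: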

#SBATCH --array=1-12%2

This directive creates an array of 12 tasks (indices 1 through 12), with at most 2 running concurrently (read more here).
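The array specification can be read as a range of task indices plus a concurrency limit after the % sign. The helper below is a small illustrative parser for the simple START-END%LIMIT form only; parse_array_spec is a hypothetical name, and SLURM accepts richer forms (lists and step sizes) that this sketch does not handle.

```python
def parse_array_spec(spec: str):
    """Parse 'START-END' or 'START-END%LIMIT' into (indices, limit).

    limit is None when the spec sets no concurrency limit.
    """
    range_part, _, limit_part = spec.partition("%")
    start, end = (int(value) for value in range_part.split("-"))
    indices = list(range(start, end + 1))
    limit = int(limit_part) if limit_part else None
    return indices, limit


indices, limit = parse_array_spec("1-12%2")
print(len(indices), "tasks, at most", limit, "running at once")
```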

Inside the SLURM batch script, run the agent command:

wandb agent --count 1 your_entity/your_project/your_sweep_id

Replace your_entity, your_project, and your_sweep_id with your actual W&B account name, project name, and the sweep ID you received in Step 2.

Note

Set one sweep run per array job ID by using the --count 1 flag.
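If the batch script delegates to Python, the agent command can be assembled and launched as in the sketch below. The function names are hypothetical; SLURM_ARRAY_TASK_ID is the environment variable that SLURM sets for each array task, and printing it next to the command is one simple way to relate log files to sweep runs.

```python
import os
import subprocess


def build_agent_command(entity, project, sweep_id, count=1):
    """Return the wandb agent command as an argument list."""
    return ["wandb", "agent", "--count", str(count),
            f"{entity}/{project}/{sweep_id}"]


def launch_agent(entity, project, sweep_id, environ=None):
    """Run one agent and report which array task started it."""
    environ = os.environ if environ is None else environ
    task_id = environ.get("SLURM_ARRAY_TASK_ID", "not-in-array-job")
    command = build_agent_command(entity, project, sweep_id)
    print(f"array task {task_id}: {' '.join(command)}")
    # check=True raises CalledProcessError if the agent exits with an error.
    subprocess.run(command, check=True)


command = build_agent_command("your_entity", "your_project", "your_sweep_id")
print(command)
```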

19.2.6. Step 4: Sweep Visualization

You can explore and compare your sweep runs using the W&B App UI. Just open your project, click the Sweeps (broom) icon in the sidebar, and select your sweep from the list.

19.2.6.1. Key Sweep Visualizations

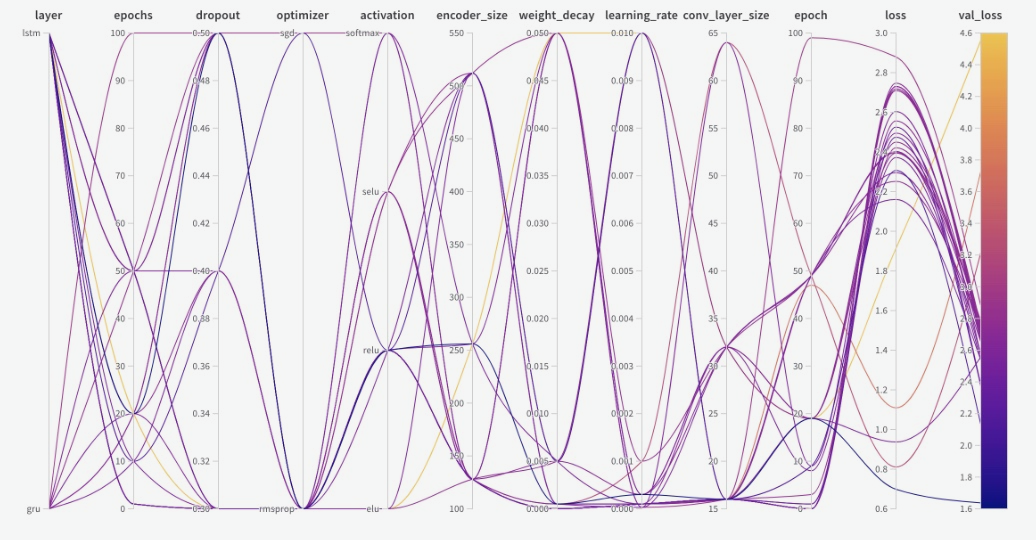

Parallel Coordinates Plot: This chart provides a quick overview of how different hyperparameter values relate to model performance. Each line represents a sweep run, showing how its specific parameter values align across multiple axes and relate to the final metric. It helps spot patterns and trade-offs between parameters.

Parameter Importance Plot: This plot ranks hyperparameters based on how strongly they influence your target metric. It helps identify which parameters had the greatest impact on performance, guiding future tuning efforts.

Note

Correlation measures the linear relationship between a single hyperparameter and a performance metric, while Importance tells you how much a hyperparameter influenced the model’s performance, regardless of whether the effect was linear or nonlinear.
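The difference matters most when a hyperparameter has a strong but nonlinear effect. The sketch below uses synthetic numbers, not results from the example sweep: a metric that depends on the parameter in a symmetric U-shape has zero linear correlation with it, even though the parameter fully determines the metric. How W&B computes importance is not reproduced here.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Synthetic hyperparameter values, centered on a hypothetical optimum.
offsets = [-2, -1, 0, 1, 2]

u_shaped = [x ** 2 for x in offsets]    # strong nonlinear dependence
linear = [2 * x + 1 for x in offsets]   # purely linear dependence

print(pearson(offsets, u_shaped))
print(pearson(offsets, linear))
```

A parameter like the first one would show near-zero correlation in the plot while still deserving a high importance score.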

Note

You can learn more about these visualizations in the W&B documentation.

19.2.7. Note on Mapping Sweep Runs to SLURM Array Jobs

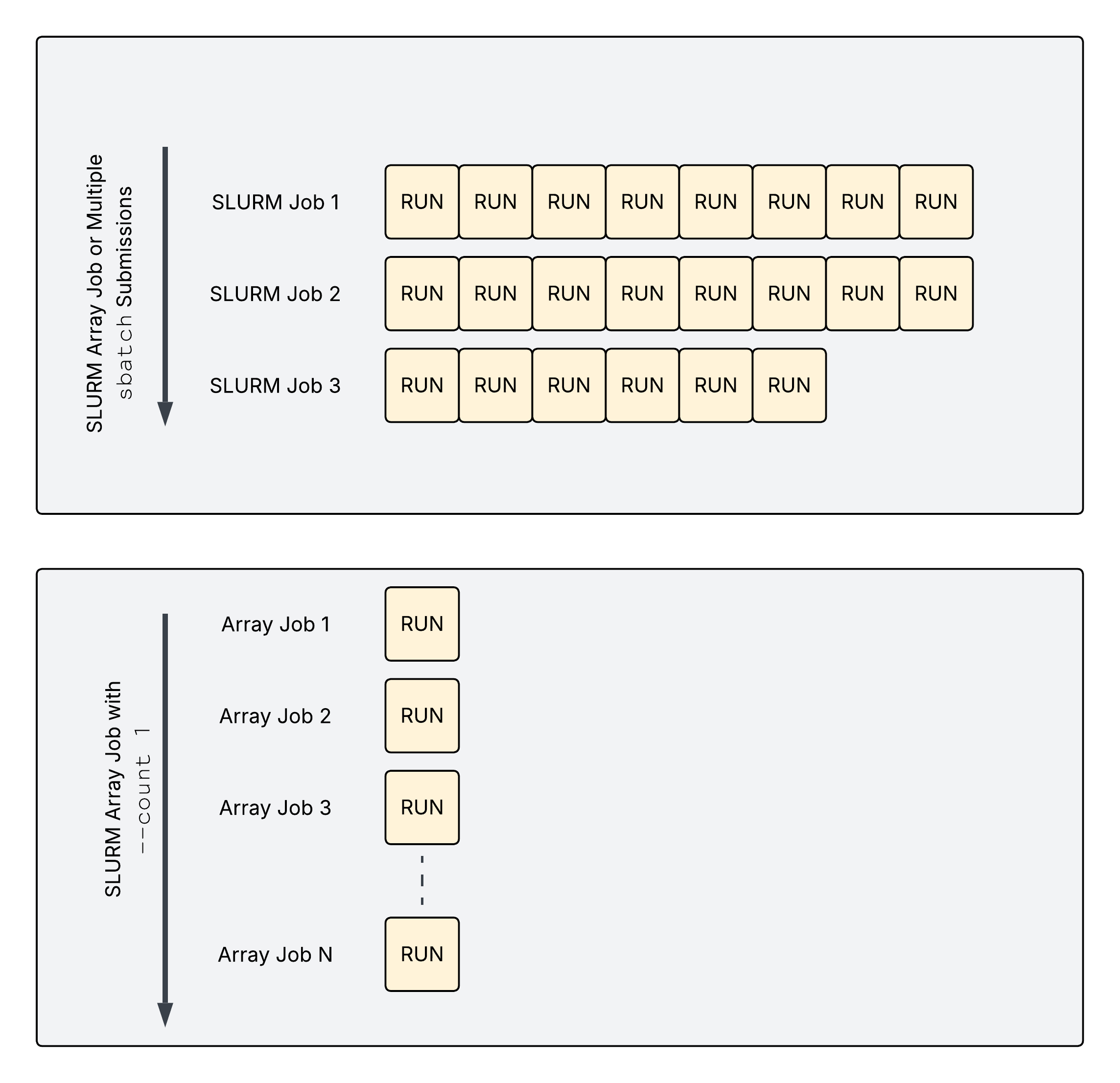

The way you configure your SLURM jobs determines how sweep runs are distributed. The diagram illustrates two different execution patterns when running wandb agent.

The top execution pattern shows multiple runs per task in a SLURM array job (no --count 1 flag). In this setup, each task within the SLURM array job (or individual submission) executes several sweep runs in sequence. This happens when you omit the --count 1 flag in your wandb agent command. Each task continues pulling new runs from the sweep controller until the sweep is complete or the job is stopped.

Good for maximizing GPU/CPU utilization

Harder to map specific sweep runs to array job IDs

The bottom execution pattern shows one run per task in a SLURM array job (--count 1). When using wandb agent --count 1, each task within the SLURM array job executes exactly one sweep run. This creates a 1:1 mapping between SLURM array job IDs and W&B sweep runs.

Easier to track and debug individual runs

Enables fine-grained resource control (e.g., job timeouts, logs)

Requires more job submissions for large sweeps

Note

Use --count 1 if you want each array job to handle a single, isolated hyperparameter configuration from the sweep.
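The two patterns can be compared with a toy simulation. This is an idealized sketch that hands out runs round-robin, as if every run took the same time; on a real cluster the assignment depends on run durations and scheduling. The simulate function and its arguments are hypothetical.

```python
from collections import deque


def simulate(num_runs, num_tasks, count=None):
    """Assign sweep runs to array tasks; count caps the runs per task."""
    queue = deque(range(num_runs))
    assignment = {task: [] for task in range(1, num_tasks + 1)}
    active = list(assignment)
    while queue and active:
        for task in list(active):
            if not queue:
                break
            assignment[task].append(queue.popleft())
            # A task with --count N stops after N runs.
            if count is not None and len(assignment[task]) >= count:
                active.remove(task)
    return assignment


# Four sweep runs, two tasks, no --count: each task handles several runs.
print(simulate(num_runs=4, num_tasks=2))

# Four sweep runs, four tasks, --count 1: one run per task.
print(simulate(num_runs=4, num_tasks=4, count=1))
```

In the second case each array task ID corresponds to exactly one sweep run, which is the 1:1 mapping described above.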

19.2.8. Cross-Run Live Loss Averaging

This section covers computing cross-run averages of training and validation losses in real time during W&B hyperparameter sweeps. Workers publish per-epoch losses to a shared Redis server; aggregators compute averages and publish them back so that all workers log synchronized Train Loss Average and Validation Loss Average metrics to W&B. For the complete workflow, follow this example.
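The aggregation logic can be sketched without a Redis server. In the block below, an in-memory class stands in for the shared store so that the averaging step is easy to follow; InMemoryLossStore and its methods are hypothetical and are not the Redis client API or the code of the linked example.

```python
from collections import defaultdict
from statistics import fmean


class InMemoryLossStore:
    """Stand-in for the shared Redis server (assumption, not a Redis client)."""

    def __init__(self):
        # Maps (metric, epoch) to {run_id: loss}.
        self._losses = defaultdict(dict)

    def publish(self, run_id, metric, epoch, loss):
        """Called by a worker after it finishes an epoch."""
        self._losses[(metric, epoch)][run_id] = loss

    def average(self, metric, epoch):
        """Called by an aggregator; returns None if nothing was published."""
        values = self._losses.get((metric, epoch))
        return fmean(values.values()) if values else None


store = InMemoryLossStore()
store.publish("run-a", "train", epoch=0, loss=1.0)
store.publish("run-b", "train", epoch=0, loss=0.5)

# Each worker would log this value as "Train Loss Average" for epoch 0.
print(store.average("train", 0))
```

Keying the losses by run means a worker that republishes an epoch overwrites its own entry instead of being counted twice.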